My Content Creation Workflow in Hugo

Since I ported my site to a static site built with Hugo in late March, I’ve been ironing out how to create and post content as efficiently as possible. I’ve gone through a few iterations, and I thought I would share what I’ve found to be a relatively painless process, plus a couple of thoughts on what could be improved.

Static Site Generators (SSG) v. Content Management Systems (CMS)

For those who have not used both of these systems, I’ll go over some high-level differences between SSG and CMS. I will share some basic points on content creation processes with CMS, and then I will jump into what I am doing with my current site in Hugo.

First, SSG are systems that (generally) run locally on a content creator’s computer or a shared staging site. They take a variety of content, assembled into the form the SSG consumes, and generate a static web site that can then be deployed to a live web server. CMS, on the other hand, are generally applications deployed on the live web server, where content creators add content directly to the system and use workflows in the application to manage the full lifecycle of content, from creation and deployment to archiving. A CMS generally serves the content itself, while a static site generated by an SSG may be served by any web server software.

While none of these statements is a hard and fast rule, they are good general rules about how these systems differ. There are many more details of SSG and CMS that distinguish them from each other, and plenty of similarities, but at a high level this is a good overview of what each system does.

Content Workflow Using Hugo

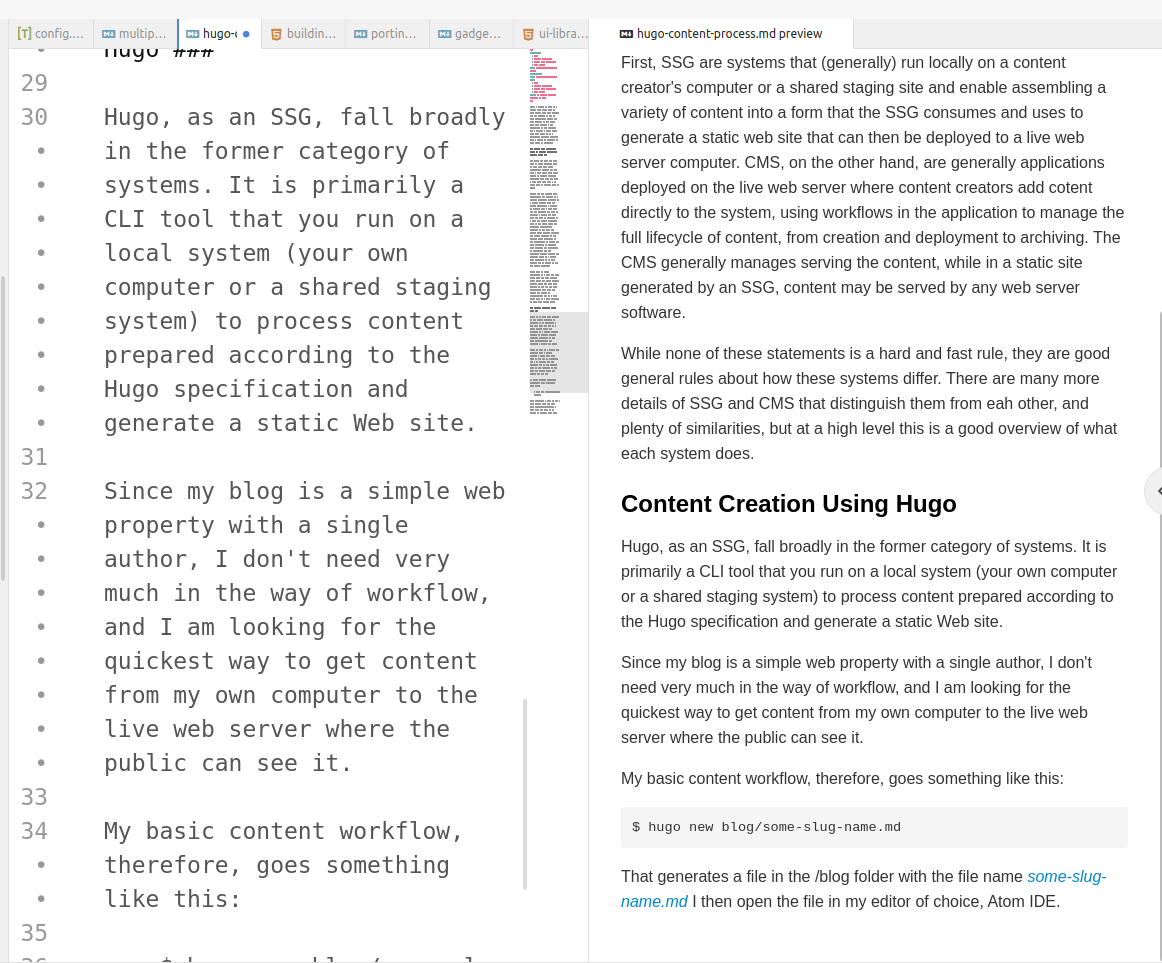

Hugo, as an SSG, falls broadly in the former category of systems. It is primarily a CLI tool that you run on a local system (your own computer or a shared staging system) to process content prepared according to the Hugo specification and generate a static web site.

Since my blog is a simple web property with a single author, I don’t need very much in the way of workflow, and I am looking for the quickest way to get content from my own computer to the live web server where the public can see it.

My basic content workflow, therefore, goes something like this:

$ hugo new blog/some-slug-name.md

That generates a file named some-slug-name.md in the blog folder under Hugo’s content directory (content/blog by default). I then open the file in my editor of choice, Atom IDE. Atom has a convenient preview window that shows what the Markdown you type in will look like on the way out. Why someone doesn’t just turn this preview window into a full-fledged WYSIWYG Markdown editor I don’t understand, but it works well enough and responsiveness is not a problem.

After authoring the content and adding any images I need to the /static/images/blog folder, I do a quick check in /config.toml for the baseURL variable of the site. Since I run a local version of the site, I have two baseURL lines, one for local deployment and the other for live deployment, and I comment out whichever one I am not using. If I want to check the content first, I use the local baseURL; if not, I use the live baseURL.

#config.toml

baseURL = "https://robertmunn.com/"

#baseURL = "http://robertmunn.local/"

Then I run:

$ hugo

This command takes all of the layout, templates, and content of the site and turns it into a static web site in the /public folder of the project root where all of the Hugo stuff lives. So the content is generated, but I still need to get it to the live web server.
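Switching between the two baseURL lines by hand is easy to forget. As a rough sketch, the same choice could be made on the command line instead, since Hugo accepts a --baseURL flag that overrides the value in config.toml for a single run. The build_site function name and its local/live arguments are made up for this example; the two URLs are the ones from the config above.

```shell
#!/bin/sh
# Hypothetical helper: build the site for either the local or the live host.
# Usage: build_site local | build_site live
build_site() {
    case "$1" in
        local) base_url="http://robertmunn.local/" ;;
        live)  base_url="https://robertmunn.com/" ;;
        *)
            echo "usage: build_site local|live" >&2
            return 2
            ;;
    esac

    # --baseURL overrides the baseURL set in config.toml for this run only,
    # so the config file itself never needs to be edited.
    hugo --baseURL "$base_url"
}
```

With this in place, build_site local would generate the site for checking on the local host, and build_site live would generate the copy that goes to the server.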

Next, I compress the contents of the /public folder into an archive and FTP it to a folder on the web server. If I don’t have any new images to add for the new post, I may delete the images folder to save space and make the transfer faster, but that’s an optional step.
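The archive step could be scripted along these lines. This is only a sketch: it assumes a gzip-compressed tar archive (the post does not say which format is used), and the package_site function and its skip-images argument are invented for the example.

```shell
#!/bin/sh
# Hypothetical helper: pack the generated site into a .tar.gz archive.
# Usage: package_site SRC_DIR ARCHIVE [skip-images]
package_site() {
    src_dir=$1
    archive=$2
    skip_images=${3:-no}

    if [ ! -d "$src_dir" ]; then
        echo "package_site: $src_dir is not a directory" >&2
        return 1
    fi

    if [ "$skip_images" = "skip-images" ]; then
        # Leave the top-level images folder out when no new images were added.
        tar -czf "$archive" --exclude='./images' -C "$src_dir" .
    else
        # -C switches into the source folder so paths in the archive are relative.
        tar -czf "$archive" -C "$src_dir" .
    fi
}
```

For example, package_site public site.tar.gz skip-images would archive everything in public except the images folder, which does the same job as deleting that folder by hand before compressing.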

Lastly, I’ve written a shell script on the (Linux) web server to decompress the archive and deploy it live. To run that script, I log in remotely to the web server using SSH and run the script. Generally the process takes a couple of minutes.
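A server-side script for this step might look something like the sketch below. It is not the actual script from my server: the deploy_archive name, the tar.gz format, and any paths passed to it are assumptions for illustration. It unpacks into a scratch folder first and then copies over the live files, so images already on the server survive when the archive was built without the images folder.

```shell
#!/bin/sh
# Hypothetical deploy helper: unpack an uploaded archive into the web root.
# Usage: deploy_archive ARCHIVE WEB_ROOT
deploy_archive() {
    archive=$1
    web_root=$2

    if [ ! -f "$archive" ]; then
        echo "deploy_archive: no such archive: $archive" >&2
        return 1
    fi

    # Unpack into a scratch folder so a corrupt archive never touches the live site.
    staging=$(mktemp -d) || return 1
    if ! tar -xzf "$archive" -C "$staging"; then
        rm -rf "$staging"
        return 1
    fi

    mkdir -p "$web_root"
    # Copy over the live files; anything not in the archive is left in place.
    cp -R "$staging"/. "$web_root"/
    rm -rf "$staging"
}
```

Because the copy only adds and overwrites files, pages removed from the site would stay on the server until deleted by hand. That is a trade-off of this particular sketch.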

Improving the Workflow

As you can see, there is very little to my workflow, so there isn’t necessarily a lot of room for improvement. I’ve been thinking about whether I want to use a folder on my system for deployment and automatically sync that folder to the web server’s content folder.

Automating the deployment of the content to the server certainly makes sense in some respects. It saves time and reduces the number of steps in the workflow, and that makes the process easier. On the downside, with each process that I automate, I remove a potential place where a mistake can be caught before deployment. Since I’m the sole author of the site, I do not have the same concerns as a large content team authoring a big commercial site, but I’d still like things to be right when they land on the server.

I will probably automate the deployment process, but I’m thinking about how to proceed first, not because it is that important in and of itself, but because as a professional I try to employ the same practices I recommend and provide for clients.
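One way to automate the sync while keeping a checkpoint would be to preview the transfer before running it. The sketch below assumes rsync is available on both machines, which is not part of my current setup; the sync_site name and the --go argument are invented for the example, and the destination would be something like user@host:/path/to/webroot.

```shell
#!/bin/sh
# Hypothetical helper: sync the deployment folder to the server's content folder.
# Usage: sync_site SRC_DIR DEST [--go]
# Without --go it only previews the transfer.
sync_site() {
    src=$1
    dest=$2
    mode=${3:-preview}

    if [ "$mode" = "--go" ]; then
        # -a preserves file attributes, -z compresses data in transit.
        rsync -az "$src"/ "$dest"/
    else
        # --dry-run lists what would be transferred without changing anything.
        rsync -azv --dry-run "$src"/ "$dest"/
    fi
}
```

Running sync_site once without --go and reading the file list gives back the pause for catching mistakes that full automation would otherwise remove.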